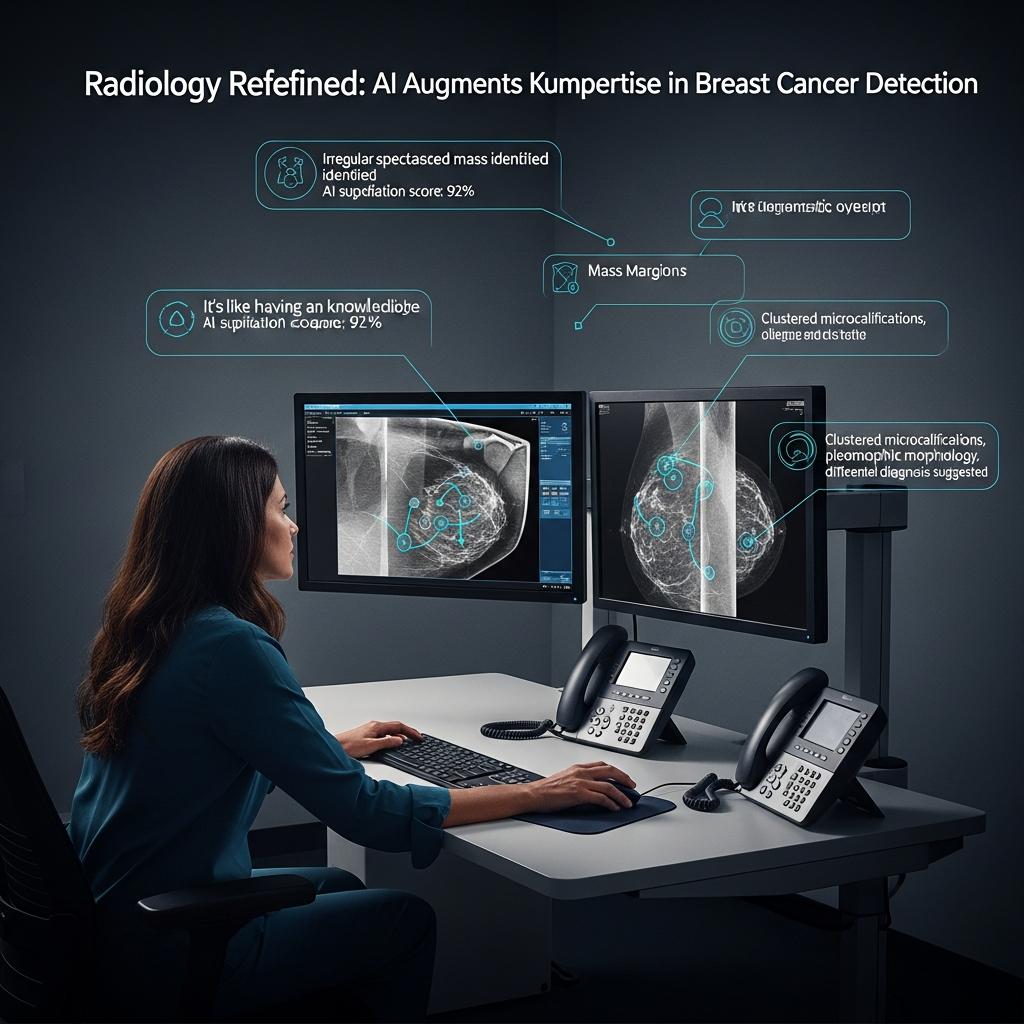

The integration of artificial intelligence into healthcare promises a revolution in diagnostics, especially in critical areas like cancer detection. New research from the Stevens Institute of Technology offers a pivotal insight: AI can indeed sharpen doctors' diagnoses of breast cancer images. However, this benefit hinges on a crucial condition: AI explanations must be carefully designed to support clinicians without causing cognitive overload. The point is not simply to add AI to the workflow, but to add AI whose explanations are well designed.

This matters because AI systems increasingly analyze X-rays, MRIs, and CT scans, identifying subtle patterns that human eyes might miss. AI can sift through vast medical datasets quickly and without fatigue. Yet, despite this potential, many clinicians remain wary. The primary concern? AI's "black box" nature, in which predictions arrive without transparent explanations. This lack of clarity erodes trust, a cornerstone of effective clinical decision-making.

The Nuance of AI Explainability in Medical Imaging

The Stevens Institute of Technology study, led by associate professor Onur Asan, investigated how different levels of AI explanations influence diagnostic accuracy and trust among oncologists and radiologists. The findings challenge the intuitive belief that “more explanation is always better.” Researchers observed 28 clinicians using an AI system to analyze breast cancer images. While all participants received AI-generated assessments, some were given additional layers of detail about the AI’s reasoning.

Balancing Transparency and Cognitive Load

A key revelation was that overly detailed or complex explanations proved counterproductive. When clinicians spent more time processing elaborate AI rationales, their attention was diverted from the images themselves. This increased cognitive load, slowed decision-making, and, in some cases, led to more diagnostic mistakes. As Asan emphasized, adding unnecessary information burdens clinicians, potentially impacting patient safety. The goal isn’t just explainability, but effective explainability – providing enough insight to build trust without creating mental fatigue.

This concept resonates with broader principles of information processing and learning. Just as students with ADHD benefit from strategic, active learning methods rather than passive, prolonged study sessions, clinicians need AI tools that help them absorb information efficiently. Too much data, even relevant data, can hinder rather than help, turning a powerful assistant into a distraction. The aim should be to streamline the physician's mental workflow, allowing them to focus their expertise where it is most needed.

The Double-Edged Sword of Trust and Overconfidence

The study also revealed a different kind of risk: overconfidence in AI. In certain scenarios, clinicians trusted the system's output so strongly that they were less likely to scrutinize its results critically, even when the AI made errors. This "blind trust" can be dangerous, because unchecked AI suggestions could lead to misdiagnoses or suboptimal patient care. Effective human-AI collaboration requires healthy skepticism, not unquestioning acceptance.

This phenomenon highlights a crucial design challenge for the next generation of medical AI: building tools that are transparent enough to be trustworthy, yet simple and intuitive enough to fit into the high-pressure environment of clinical care. Emerging digital health devices that embed advanced AI, such as AI-powered smart glasses, face similar trade-offs between sophisticated functionality, user experience, data privacy, and the potential for over-reliance.

Designing AI for Enhanced Clinical Decisions

The research underscores that merely integrating AI into the reading room is insufficient. To truly improve care and boost diagnostic accuracy, systems must be designed with clinicians’ cognitive limits and workflows firmly in mind. This involves a thoughtful approach to Explainable AI (XAI), ensuring that explanations support, rather than overwhelm, human judgment.

Essential Design Principles for Medical AI

- Context-Aware Explanations: AI should provide explanations tailored to the clinician’s specific need and the clinical context. Generic, verbose explanations are less effective than concise, actionable insights that highlight key features or patterns.

- Interactive Transparency: Instead of static explanations, future AI systems could offer interactive capabilities, allowing clinicians to probe deeper into the AI’s reasoning on demand. This empowers active learning and critical engagement, much like effective study techniques that encourage querying and self-testing.

- Minimizing Cognitive Load: Designers must prioritize simplicity and intuitiveness. This means avoiding excessive data presentation and focusing on presenting the most salient information clearly and concisely. The goal is to augment, not complicate, existing clinical processes.

- Integration with Workflow: AI tools should fit naturally into existing clinical workflows, minimizing disruption and maximizing ease of use. If a tool feels cumbersome, its usefulness will be overshadowed.

The Critical Role of Training and Human Expertise

Beyond design, comprehensive training is paramount. Clinicians who use AI must be taught to interpret its outputs critically. They need to understand the AI's strengths and limitations, recognizing it as a powerful assistant, not a replacement for their expertise. This training should emphasize analytical interpretation over blind acceptance, fostering a collaborative mindset in which human judgment and AI insights combine to improve patient safety.

The journey towards effective AI explanations in healthcare reflects broader principles of technology adoption. Tools are most likely to be embraced when they are perceived as both useful and easy to use. For doctors, an AI system that clearly enhances their ability to make accurate diagnoses without adding undue stress or complexity will become an invaluable partner.

The Broader Impact on Patient Care

For patients, the stakes of accurate and early detection in diseases like breast cancer are incredibly high. AI holds immense promise for earlier tumor identification, reducing missed diagnoses, and standardizing care across diverse clinical settings. This has the potential to dramatically improve outcomes for millions worldwide.

Studies like the one from Stevens Institute of Technology provide a crucial roadmap for the future of medical imaging and clinical decisions powered by AI. They remind us to build tools that amplify human judgment, avoid overloading already stretched clinicians, and always keep patient safety at the absolute center of every design choice. As AI continues to evolve, its true value will be measured not just by its computational power, but by its ability to intelligently and intuitively empower the human experts who wield it.

Frequently Asked Questions

How can AI explanations improve cancer diagnosis without overwhelming doctors?

AI explanations can enhance cancer diagnosis by providing doctors with targeted, concise insights into the AI’s reasoning, highlighting critical features or patterns in medical images. The key is intelligent design that avoids excessive detail, which can increase cognitive load and lead to errors. By offering just enough transparency to build trust and facilitate critical thinking, AI serves as an effective assistant, allowing clinicians to efficiently integrate AI insights into their diagnostic process without being distracted or overwhelmed.

What key design principles should guide the development of AI tools for medical imaging?

Designing effective AI tools for medical imaging requires adherence to principles such as context-aware explanations, interactive transparency, minimization of cognitive load, and seamless integration with existing clinical workflows. This means providing explanations tailored to specific needs, allowing clinicians to explore AI reasoning on demand, presenting information clearly and concisely, and ensuring the tools are intuitive and easy to use within a high-pressure clinical environment. Such design ensures AI supports, rather than hinders, human expertise.

What are the risks of over-reliance on AI in clinical decision-making, and how can they be mitigated?

A significant risk of over-reliance on AI is "blind trust," where clinicians accept AI outputs without sufficient scrutiny, even when the AI is wrong, potentially leading to misdiagnoses. This can compromise patient safety. Mitigation strategies include robust training for clinicians on critically interpreting AI results, emphasizing AI as an assistant rather than a replacement for human judgment, and designing AI systems that prompt critical review rather than passive acceptance. Fostering a mindset of healthy skepticism and continuous professional development is crucial to ensuring responsible AI integration.