Meta AI, the chatbot Meta Platforms launched in April, has a public “Discover” feed that has been displaying user conversations containing sensitive and highly personal information. The feed is meant to show how people interact with the AI. In practice, it is inadvertently exposing private details to public view, which raises significant privacy concerns.

Within the Meta AI app, the “Discover” tab presents a timeline of users’ interactions with the chatbot. Similarly, the Meta AI website features an extensive collage of these conversations. While some displayed queries are innocuous, such as requests for recipes or trip itineraries, a disturbing number reveal intimate, confidential, and potentially identifying details tied to user names and profile photos.

Examples of Exposed Personal Data

The range of sensitive information appearing publicly is alarming. Instances include:

Medical Details: Users have shared struggles with physical ailments, asked for advice on rashes or hives, described surgical procedures along with their own age and occupation, and discussed other health-related issues.

Legal and Financial Matters: Conversations show users seeking help terminating residential tenancies and drafting academic warning notices that include school names and personal details. Others asked about potential corporate tax fraud liability tied to specific locations, and some even asked the AI to draft character statements for court cases, supplying personally identifiable information about themselves and others involved.

Deeply Personal Confessions: Users have been observed sharing details about relationship issues, private affairs, and highly personal desires, including specific questions about dating preferences linked to age and location. One notable example involved a 66-year-old single man openly asking the AI about countries where younger women prefer older men, detailing his location and willingness to relocate.

Many of these public posts appear linked to users’ public Instagram profiles, sometimes displaying full names and profile photos, making the exposed information easily attributable.

Why is This Happening? User Misunderstanding or Design?

Privacy experts are sounding the alarm. Calli Schroeder, senior counsel for the Electronic Privacy Information Center (EPIC), described the public display of medical, mental health, home address, and legal information as “incredibly concerning.” She suggests it highlights a fundamental misunderstanding among users about how these chatbots function, their intended use, and the nature of privacy within these systems.

Schroeder emphasizes a critical point users may be overlooking: “Nothing you put into an AI is confidential.” She warns that this information doesn’t stay solely between the user and the app; it goes “to other people, at the very least to Meta.”

Meta spokesperson Daniel Roberts stated that chats are private by default and become public only if users explicitly choose to share them through a multistep process on the Discover feed. The amount of sensitive data on the feed, however, calls this assurance into question. Critics speculate that the sharing feature itself may be confusing, or may be perceived as a “dark pattern.” Because Meta frames the feed as a way to “discover all sorts of fun ways that people are creating stuff with Meta AI,” users may share private interactions without realizing that the Discover feed is public. Some observers suggest that people less familiar with nuanced sharing controls, potentially including older users, may be especially susceptible to this kind of unintended exposure.

Beyond stating that sharing requires explicit user action, Meta has not publicly detailed any specific mitigations to keep personally identifiable information (PII) off the Discover feed, or to handle it once a chat has been posted there.

Ongoing Concerns Amidst Rapid AI Deployment

It remains unclear whether users are fully aware that their conversations are public, or whether some of the public posts are deliberate trolling prompted by media coverage of the issue. What is clear is that sharing is not the default setting for Meta AI chats.

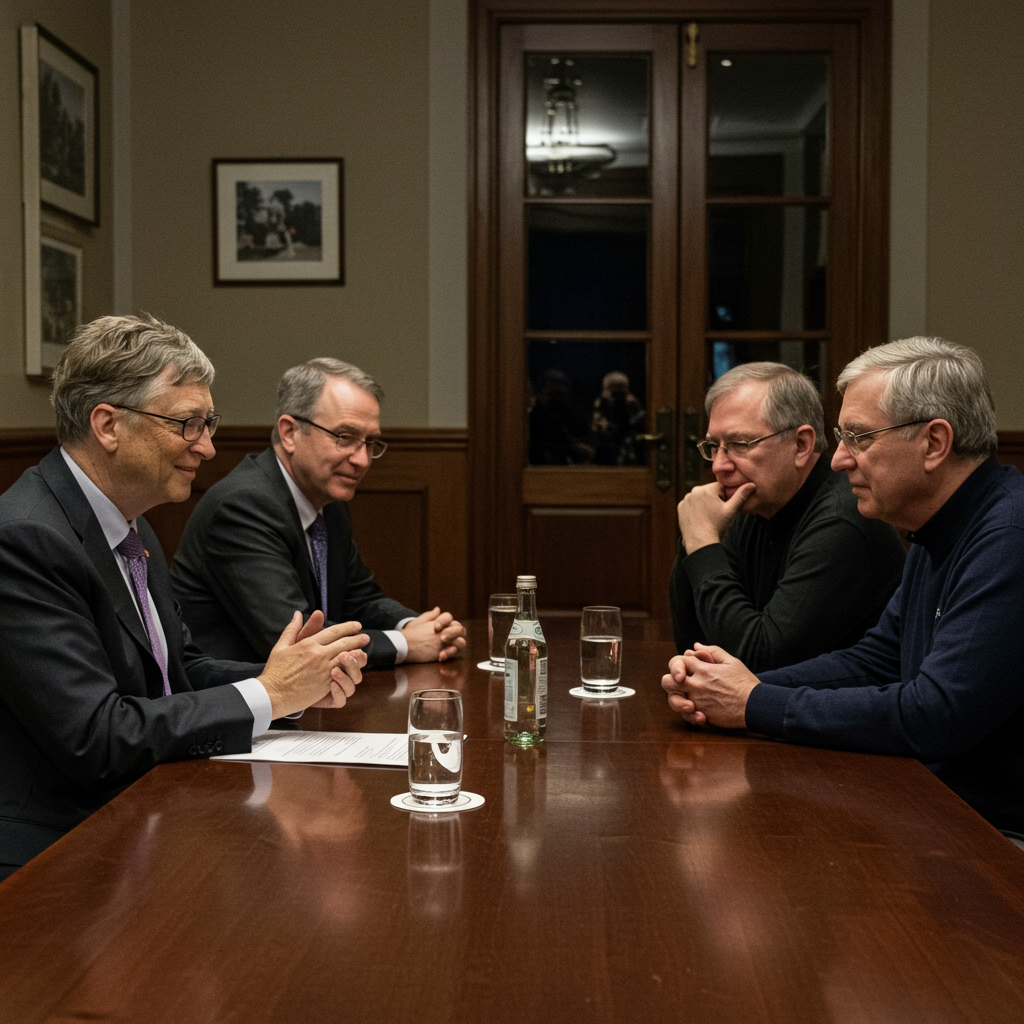

Critics have flagged potential privacy issues with Meta AI since its launch, with one headline calling it “a privacy disaster waiting to happen.” Deployment has nonetheless continued at a rapid pace: Meta CEO Mark Zuckerberg recently announced that Meta AI has reached 1 billion users across the company’s platforms, underscoring the massive scale of this technology.

Even Meta’s own chatbot, when asked on the public feed about the company’s awareness of sensitive information being made public, acknowledged that “Some users might unintentionally share sensitive info due to misunderstandings about platform defaults or changes in settings over time,” framing it as an “ongoing challenge.”

The exposure of deeply personal and sensitive user data on Meta AI’s public feed, likely stemming from a combination of user misunderstanding and potentially confusing interface design, poses a significant privacy risk and runs counter to what users typically expect from a private chatbot assistant. Meta says it aims to make its AI the “most useful assistant in the world,” but the public exposure of private information raises serious questions about how user data is handled and safeguarded in the rush to deploy AI at scale.

(Note: Other external research has examined Meta’s use of user content for AI training. This article focuses specifically on the privacy issue of user-generated content being publicly displayed on the Discover feed.)