A startling courtroom incident involving Meta AI glasses has underscored the escalating tension between cutting-edge wearable technology and established privacy safeguards. During a high-profile Los Angeles trial, members of Mark Zuckerberg’s team faced a stern contempt of court warning for wearing Meta’s camera-equipped Ray-Ban smart glasses in a no-recording environment. This courtroom drama is just one facet of Meta’s growing legal and ethical challenges, revealing deeper issues surrounding digital surveillance, AI accountability, and the impact of social media on mental health.

The Courtroom Clash: AI Glasses Meet Judicial Authority

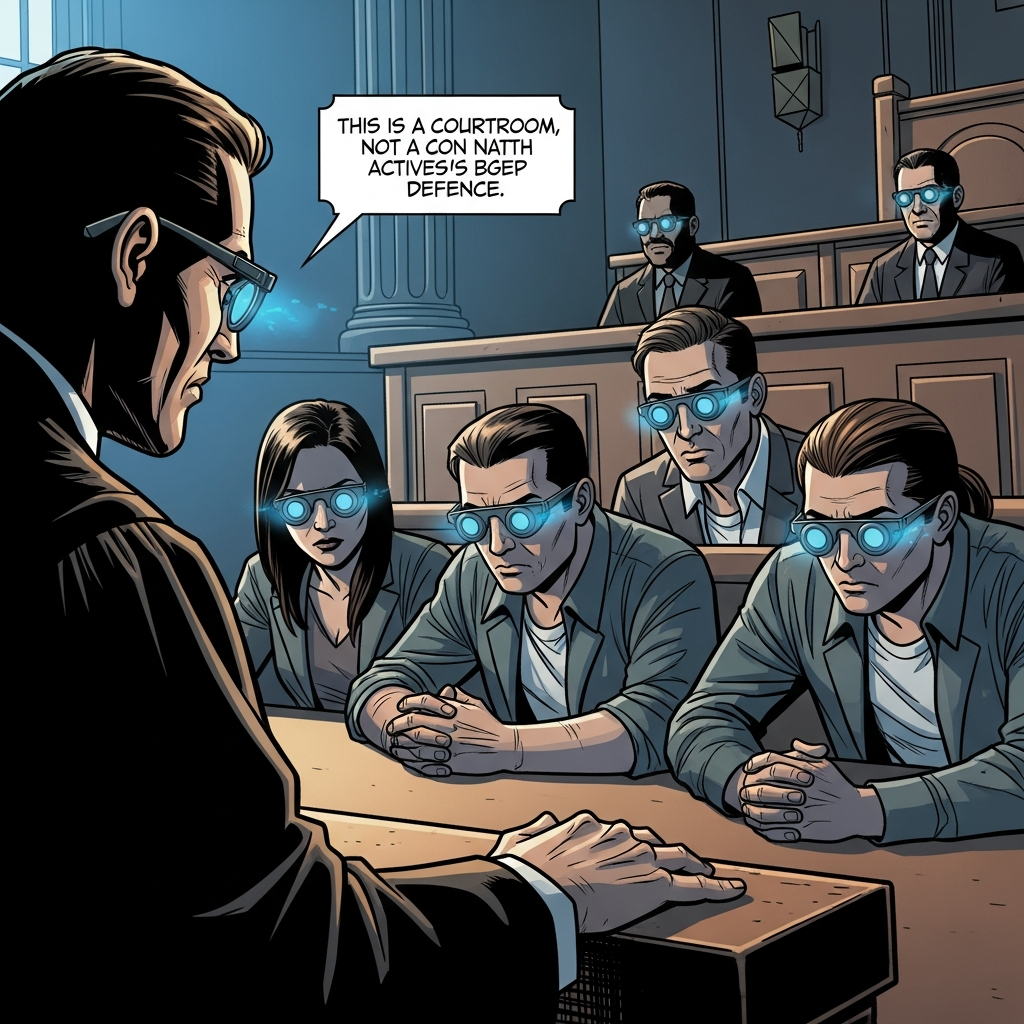

The scene unfolded dramatically in a Los Angeles courthouse. As Meta CEO Mark Zuckerberg’s entourage entered, Judge Carolyn Kuhl quickly spotted the Ray-Ban smart glasses, which resemble ordinary eyewear but pack sophisticated recording capabilities. Her immediate response was unequivocal: remove the devices or face contempt of court charges. This swift judicial intervention, unusual for consumer tech, highlighted the gravity of the situation. Despite the judge’s clear directive, reports noted at least one individual still wearing the Meta AI glasses in a courthouse hallway near jurors, claiming the device wasn’t recording.

The core problem, as the incident demonstrates, is that there is no reliable way to verify whether someone wearing smart glasses is actively recording. While Meta’s latest Ray-Ban smart glasses, launched in 2023, feature a small LED indicator light to signal recording, critics argue it’s too subtle and easily overlooked, rendering it ineffective in many real-world scenarios. The incident gave immediate ammunition to privacy advocates, who have long warned that the normalization of hidden cameras creates pervasive surveillance risks.

The Broader Battle for Privacy in a Wearable World

The courtroom confrontation is a potent symbol of a much wider conflict. Legal institutions have enforced strict recording bans for decades to protect jury integrity and ensure fair trials. These rules were designed for overt cameras and smartphones, not for devices virtually indistinguishable from regular prescription glasses. This struggle is not unique to courts; schools, hospitals, and gyms face similar dilemmas. Some venues have resorted to blanket bans on smart glasses, yet effective enforcement without intrusive searches remains nearly impossible.

The trend toward wearable AI is accelerating, with tech giants like Apple, Amazon (Echo Frames), and even Google reportedly developing or already selling their own smart glasses. This industry-wide push suggests that institutional resistance, as seen in Judge Kuhl’s courtroom, is merely the beginning. The incident brings power dynamics to the forefront, questioning who truly sets the rules when a major tech CEO’s team appears in court with devices designed to blur privacy boundaries. Judge Kuhl’s firm response established judicial authority, but the broader societal debate over ambient recording is far from resolved. Privacy researchers caution that once cameras become invisible and ubiquitous, the fundamental social contract regarding consent and recording will be irrevocably altered.

AI’s Double-Edged Sword: Identity and Impersonation Failures

Beyond the public spectacle of Meta AI glasses in court, Meta’s artificial intelligence systems face scrutiny from another unexpected quarter: a lawyer named Mark S. Zuckerberg. This Indianapolis attorney has filed a lawsuit against Meta, alleging repeated account suspensions and false accusations of impersonating its CEO, Mark E. Zuckerberg. For 14 years, this shared name has plagued the lawyer, causing professional disruption and financial losses.

Meta’s automated systems consistently flagged his accounts as fraudulent, especially when he ran ads for his law practice. Although he uploaded personal documents, including his driver’s license and credit card details, and even video footage of his face, his accounts were repeatedly shut down. These suspensions sometimes lasted for months, during which Meta allegedly continued to accept his advertising payments. The case highlights a significant flaw in Meta’s AI-driven identity verification processes: a shared name, even one with a distinct middle initial, was enough to cause persistent personal and professional harm. It also underscores the current limitations of AI in handling nuanced real-world identities.

The Looming Threat: Facial Recognition and “Name Tag”

Adding another layer to the privacy concerns, Meta is reportedly planning a “terrifying” new facial recognition upgrade, dubbed “Name Tag,” for its Ray-Ban Meta smart glasses. Expected to roll out in late 2026, this feature would allow wearers to identify individuals around them and access information using the glasses’ built-in AI assistant. Imagine asking, “Meta, tell me who this person is,” and getting an instant ID.

This planned functionality raises profound ethical questions. It would effectively sweep in people who have made efforts to protect their privacy, simply because they were scanned or appeared in the background of a smart glasses user’s field of vision. Critics argue that widespread facial recognition constitutes an egregious breach of privacy because those being observed cannot consent or opt out. The technology itself is not without flaws; a New York transport authority pilot program reportedly experienced a “100% error rate.” Critics also cite inherent bias, susceptibility to manipulation, and the potential for false arrests. Concerns also exist that Meta might already be using photos captured by smart glasses, including those of unwitting passersby, to train its AI without explicit consent. Meta has a history of pursuing and then retreating from similar facial recognition features due to ethical and technical hurdles, but this persistent pursuit signals a clear ambition to integrate more intrusive AI into its wearable tech.

Meta’s Legal Crucible: The Social Media Addiction Trial

The courtroom where the Meta AI glasses incident occurred is also the stage for a landmark trial against Meta concerning social media addiction. Mark Zuckerberg’s testimony here marks his first appearance before a jury to address these grave concerns, which could profoundly impact the tech industry. This bellwether trial, involving a 20-year-old woman identified as Kaley G.M., alleges that her social media use throughout childhood adversely affected her mental health, leading to depression and suicidal thoughts.

Kaley’s lawyers contend that social media platforms are “by design” detrimental, accusing companies of “engineered addiction.” This case is a bellwether for over 2,000 similar personal injury lawsuits and more than 100,000 individual arbitration demands. Parents like Lori Schott, who lost her daughter to suicide, and Amy Neville, are vocal at the courthouse, hoping to “turn the tide” against Big Tech. Meta has acknowledged the potential financial repercussions, warning investors that these legal battles could “significantly impact” its 2026 financial results, with damages potentially reaching “high tens of billions of dollars.”

Challenging Section 230 and Family Tragedies

A crucial legal aspect of these cases involves challenging Section 230, a law that typically shields online platforms from liability for user-generated content. Plaintiffs are successfully arguing that this protection does not extend to liability stemming from a platform’s design features—such as algorithms, notifications, and infinite scrolling—which they allege contribute to a mental health crisis among teenagers.

The trial brings to light heartbreaking stories from numerous families:

- Annalee Schott died by suicide at 18; her mother noted that algorithms had pushed content related to anxiety and depression.

- Mason Bogard died at 15 after attempting a viral choking challenge seen on YouTube.

- Jordan DeMay died by suicide after being blackmailed through an international sextortion ring on Instagram.

- Selena Rodriguez died by suicide at 11; her family described extreme addiction and violent outbursts when her phone was removed.

- Englyn Roberts died by suicide at 14 after mimicking a video seen on Instagram.

These personal tragedies underscore the growing demand for tech accountability. Parents and advocates argue that companies like Meta prioritize profit over children’s safety, necessitating congressional action and stricter regulations.

The Unresolved Conflict: Innovation vs. Regulation

The convergence of these events—the courtroom defiance with Meta AI glasses, the identity crisis triggered by flawed AI, and the monumental social media addiction trial—paints a clear picture. Meta’s rapid technological innovation, particularly in AI and wearable tech, has far outpaced society’s ability to create adequate rules and regulations to govern it. This gap leaves users vulnerable and institutions struggling to maintain fundamental protections like privacy and safety. The ongoing legal battles represent a crucial, albeit belated, effort to establish accountability and redefine the boundaries of what is acceptable in the digital age.

Frequently Asked Questions

Why were Meta AI glasses banned in court?

Meta’s Ray-Ban smart glasses were banned in a Los Angeles courtroom because they are equipped with cameras that can capture photos, record video, and livestream, posing a significant risk to courtroom privacy and security. Courts enforce strict no-recording rules to safeguard jury integrity and witness safety. Critics consider the small LED indicator designed to signal recording too subtle and too easily overlooked to show reliably whether the glasses are recording. This ambiguity prompted Judge Kuhl to issue a stern contempt of court warning.

What are the major legal challenges Meta currently faces?

Meta faces several significant legal challenges. First, the company is embroiled in a landmark bellwether trial in Los Angeles concerning social media addiction, where plaintiffs allege platforms are “by design” detrimental to youth mental health and contribute to depression and suicidal thoughts. This trial could set a precedent for over 2,000 similar lawsuits. Second, Meta faces a lawsuit from lawyer Mark S. Zuckerberg, who alleges Meta’s AI systems falsely accused him of impersonation because he shares a name with the CEO, leading to account suspensions and financial losses. Finally, the planned “Name Tag” facial recognition feature for Meta’s smart glasses raises substantial privacy and ethical concerns that could lead to future legal challenges.

How can individuals protect their privacy in an age of pervasive smart tech?

Protecting privacy in an era of advanced wearable tech requires vigilance and proactive steps. Individuals should be aware of the capabilities of devices like Meta AI glasses and other smart eyewear, recognizing that recording might be occurring discreetly in public and private spaces. When in privacy-sensitive environments, observe posted rules regarding electronic devices. Consider advocating for stronger privacy regulations and corporate accountability for AI systems. On a personal level, review the privacy settings of your own social media and smart devices, limit the sharing of personal information, and be cautious about giving consent for data usage, especially concerning facial recognition and AI training.

Conclusion

The incidents surrounding Mark Zuckerberg, Meta’s AI systems, and its smart glasses reveal a tech giant at the nexus of innovation and profound societal challenges. From courtroom contempt warnings sparked by Meta AI glasses to a landmark trial on social media addiction and a lawsuit over AI-driven identity errors, the company is under immense pressure. These events collectively highlight the urgent need for robust regulatory frameworks that can keep pace with rapid technological advancements, ensuring that privacy, user safety, and ethical considerations are not merely afterthoughts. As wearable AI becomes more prevalent, the societal debate over digital surveillance and accountability will only intensify, shaping the future of our digital interactions and fundamental rights.